Tracing The Artificial Neural Networks From Scratch (Part-1)

Introduction

In this blog series we will demystify the theory, mathematics, and science behind artificial neural networks (ANNs). We will code all the fundamental units of a multi-layer feed-forward neural network from scratch in Python. This network is also known as the multi-layer perceptron (MLP) model. Neural networks come in many variants, and we will focus only on the feed-forward network, in which information flows only in the forward direction, from the input layer to the output layer. We will discuss the whole process in full detail throughout the series.

Before we start, let's look at the topics we will cover in this post.

1. Introduction and History of Artificial Neural Networks

2. Rosenblatt's Perceptron

3. Implementation of Rosenblatt's Perceptron with Python

1. Introduction and History of Artificial Neural Networks

The central building block of a neural network is the artificial neuron. It all started in 1943, when McCulloch and Pitts introduced the first model of an artificial neuron, which set off the first wave of neural network research. The next major contribution came in 1957 with the perceptron, introduced by Rosenblatt, who was a researcher at Cornell. We need a solid understanding of how a single perceptron works in order to become familiar with ANNs. Before we get into the artificial perceptron, let's get to know its biological roots.

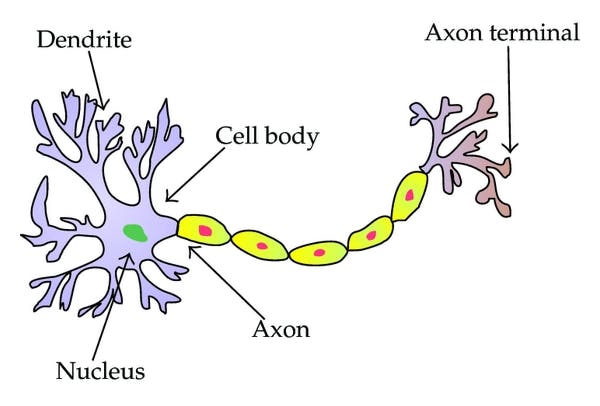

A biological neuron consists of one cell body, multiple dendrites, and a single axon. The connections between neurons are known as synapses. The neuron receives stimuli on the dendrites, and in cases of sufficient stimuli, the neuron gets activated and outputs stimulus on its axon, which is transmitted to other neurons that have synaptic connections to the activated neuron.

By definition

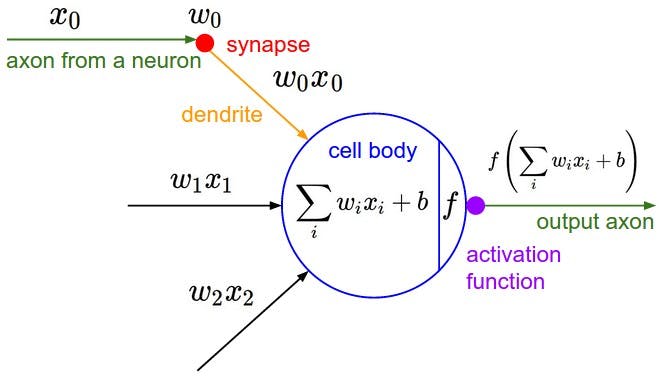

A perceptron is a type of artificial neuron. It sums up its weighted inputs to compute an intermediate value z, which is fed to an activation function. The perceptron uses the sign function as its activation function, but other artificial neurons use other functions.

Let's look at the details.

2. Rosenblatt's Perceptron

The artificial perceptron (Rosenblatt's perceptron) consists of a computational unit, a number of inputs, each with an associated input weight, and a single output. This is what it looks like:

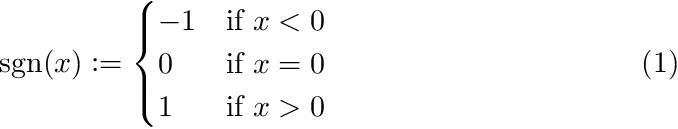

The inputs are typically named x0, x1, ..., xn in the case of n general inputs (x0 being the bias input), and the output is typically named y. The inputs and output loosely correspond to the dendrites and the axon. Each input has an associated weight (wi, where i = 0, ..., n), which historically has been referred to as the synaptic weight because it in some sense represents how strong the connection is from one neuron to another. The bias input is always 1. Each input value is multiplied by its corresponding weight before it is presented to the computational unit (the cell body). The computational unit computes the sum of the weighted inputs and then applies a so-called activation function, y = f(z), where z = w0x0 + w1x1 + ... + wnxn is the sum of the weighted inputs. The activation function for the perceptron we are starting with is called the sign function (also known as the signum function). As used here, it evaluates to 1 if its input is 0 or higher and to −1 otherwise, so y = 1 when z ≥ 0 and y = −1 when z < 0.
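The description above can be written compactly as a pair of equations. This is a sketch in the notation of this section, with x_0 = 1 as the bias input. The numeric weights in the worked line are hypothetical values chosen only for illustration.

$$
z = \sum_{i=0}^{n} w_i x_i, \qquad
y = f(z) = \begin{cases} 1 & \text{if } z \ge 0 \\ -1 & \text{if } z < 0 \end{cases}
$$

For example, with two general inputs, assumed weights w = (0.9, −0.6, −0.5), and inputs x = (1, 1, 1):

$$
z = 0.9 \cdot 1 + (-0.6) \cdot 1 + (-0.5) \cdot 1 = -0.2, \qquad y = f(-0.2) = -1
$$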

So far we have seen its mathematical representation. Now let's see how to implement a perceptron with one or more inputs (with sign as the activation function) in Python.

3. Implementation of Rosenblatt's Perceptron with Python

The Python function below simulates a single perceptron. It takes the weight vector w and the input vector x as parameters and computes the activity value, which is the sum of all inputs multiplied by their corresponding weights. The activity value is then passed to the sign function, which decides the output (either -1 or 1).

    import numpy as np

    def perceptron(w, x):
        # activity value: weighted sum of the inputs
        # (x[0] is the bias input and is always 1)
        activity = np.dot(w, x)
        # sign activation function: 1 if the activity is 0 or higher, -1 otherwise
        if activity < 0:
            return -1
        else:
            return 1
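To see the function in action, here is a minimal usage sketch. The perceptron is restated without NumPy so the snippet runs on its own. The weights are hypothetical values picked by hand for illustration (they are not learned), and "false" and "true" are assumed to be encoded as -1 and 1.

```python
def perceptron(w, x):
    """Sign-activated perceptron; x[0] is the bias input and must be 1."""
    activity = sum(wi * xi for wi, xi in zip(w, x))
    return -1 if activity < 0 else 1

# Hypothetical, hand-picked weights: weights[0] is the bias weight
weights = [0.9, -0.6, -0.5]

# Try every combination of two inputs, each either -1 or 1
outputs = {}
for x1 in (-1, 1):
    for x2 in (-1, 1):
        # Prepend the constant bias input 1 to the two general inputs
        outputs[(x1, x2)] = perceptron(weights, [1, x1, x2])
        print(x1, x2, "->", outputs[(x1, x2)])
```

With these particular weights the output is -1 only when both inputs are 1, so this single perceptron behaves like a NAND gate. Changing the weights changes which decision it makes.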

4. Summary

In this first post of our neural network series, we discussed the following:

- The structure of an ANN is loosely modeled on biological neural networks, and its central building block is the single perceptron.

- Rosenblatt came up with an artificial model of the biological neuron that can act as a decision circuit, which we will later extend into a learning algorithm.

- The activation function decides whether the weighted sum of the inputs is enough to activate the neuron.

- We can implement this basic computational unit as a simple Python function, which we will use later to build a fully connected network of nodes.